I am a data scientist driven by a passion for science, statistics, programming languages, and artificial intelligence. With a curious mind and an analytical approach, I dive into complex datasets to uncover hidden insights. My proficiency in programming languages lets me develop algorithms and models for data science problems. My dedication to continuous learning keeps me current with technological advances and fuels my interest in exploring the frontiers of AI.

Data science is the interdisciplinary field that utilizes scientific methods, processes, algorithms, and systems to extract knowledge and insights from structured and unstructured data.

Data analysis involves examining, cleaning, transforming, and interpreting data to uncover patterns, draw meaningful conclusions, and support decision-making processes.

Machine learning is a field of artificial intelligence that focuses on developing algorithms and models capable of automatically learning and making predictions from data.

Data Scientist at @Tech

FAPESP.

Data Scientist at Well be Health Tech

Develop and implement ETL processes to extract, transform, and load healthcare data from diverse sources. Collaborate with software engineers to design and develop data-driven applications and platforms. Apply statistical analysis and machine learning techniques to derive insights from healthcare data. Ensure compliance with data privacy regulations and ethical considerations in handling sensitive healthcare data.

Data Scientist at Penguin Technologies

Develop and execute comprehensive testing strategies to ensure the quality and reliability of software applications and data-driven solutions. Develop and maintain test scripts and automated testing frameworks to streamline the testing process and enhance efficiency. Continuously monitor and evaluate the performance of software applications, identifying areas for enhancement and proposing data-driven solutions for optimization.

Machine Learning Engineer/Scrum Master at Artificial Intelligence HUB

I was responsible for facilitating the effective implementation of the Scrum framework in software development projects. I facilitated communication and collaboration between the development team and stakeholders, ensuring transparency and a shared understanding of project goals, progress, and challenges. I was also responsible for designing, coding, and testing software applications based on defined requirements. I participated in the full software development life cycle, ensuring code quality, adherence to coding standards, and the use of best practices to deliver robust and scalable software.

Doctoral Degree in Nuclear Physics

A PhD in Nuclear Physics with a project in nuclear medicine, specifically focused on the dosimetry of alpha particle emitters, entails in-depth research into the precise measurement and calculation of radiation doses delivered by alpha-emitting radionuclides for medical applications. This research plays a crucial role in optimizing therapeutic radiation treatments for cancer and other diseases, enhancing the effectiveness of targeted alpha-particle therapies while minimizing potential harm to healthy tissues.

Specialization in Artificial Intelligence and Machine Learning

A graduate course with a focus on solving industrial sector problems equips students with specialized skills in applying AI and ML techniques to real-world industrial challenges. Through rigorous coursework and industry-specific case studies, students learn to develop innovative solutions that optimize manufacturing processes, enhance quality control, and drive operational efficiency.

Master's Degree in Biomedical Engineering

A Master's Degree in Biomedical Engineering, with a project focused on the assessment of peak voltage accuracy and reproducibility, involves advanced studies in the field of biomedical engineering. The project specifically targets the evaluation of how accurately and consistently peak voltages are measured in biomedical equipment or devices.

Bachelor's Degree in Physics

A Bachelor's Degree in Physics with a project in particle physics encompasses a comprehensive study of fundamental physical principles, with a specific focus on subatomic particles. The project likely involves research or experimentation related to subatomic particles, their interactions, or the study of high-energy particle collisions.

Hours Worked

Years of Experience

Happy Clients

Cups of Coffee

Proficient in applying statistical analysis, machine learning, and data mining techniques to extract insights and make data-driven decisions. Skilled in data preprocessing and feature engineering to optimize data for modeling. Experienced in developing and implementing predictive models, such as regression, classification, and clustering algorithms, to solve business problems. Proficient in programming languages, such as Python and SQL, for data manipulation, analysis, and model development. Familiar with deep learning frameworks, such as TensorFlow and PyTorch, for advanced modeling tasks. Experienced in data visualization to effectively communicate findings to non-technical stakeholders.

Proficient in designing and implementing scalable data architectures and pipelines to support efficient data storage, processing, and retrieval. Skilled in working with big data technologies, such as Hadoop and Spark, to handle large volumes of data. Experienced in data modeling, schema design, and database optimization for improved performance. Familiar with cloud-based data platforms, such as AWS, Azure, and GCP, and skilled in leveraging their services for data storage, computation, and analytics. Strong understanding of data warehousing concepts and ETL processes.

Big data refers to extremely large and complex datasets that exceed the capabilities of traditional data processing methods. It involves three main characteristics known as the three Vs: Volume (large data volume), Velocity (high data generation and processing speed), and Variety (diverse data types and sources). The goal of analyzing big data is to extract valuable insights, patterns, and trends that can inform decision-making, improve processes, and drive innovation. To handle big data effectively, organizations often use specialized technologies and tools, such as distributed computing, NoSQL databases, data lakes, and advanced analytics methods.

Proficient in extracting, transforming, and loading (ETL) data from various sources to ensure accuracy, consistency, and quality. Skilled in identifying and handling missing values, outliers, duplicates, and inconsistencies. Experienced in integrating and standardizing data from multiple sources to create a unified and reliable dataset for analysis. Proficient in using ETL tools and techniques to enhance data integrity and enable accurate reporting and decision-making.
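As a rough illustration of the cleaning steps named above (duplicates, missing values, outliers), here is a minimal sketch in plain Python. The function name `clean_records`, the `value` field, the median imputation, and the 1.5 × IQR outlier rule are all assumptions chosen for the example, not a description of any specific production pipeline.

```python
from statistics import median, quantiles


def clean_records(records, key="value"):
    """Deduplicate, impute missing values, and flag outliers (illustrative only)."""
    # 1. Drop exact duplicate records while preserving the original order.
    seen = set()
    unique = []
    for rec in records:
        fingerprint = tuple(sorted(rec.items()))
        if fingerprint not in seen:
            seen.add(fingerprint)
            unique.append(dict(rec))

    # 2. Impute missing values (None) with the median of the observed values.
    observed = [r[key] for r in unique if r[key] is not None]
    fill = median(observed)
    for r in unique:
        r["imputed"] = r[key] is None
        if r["imputed"]:
            r[key] = fill

    # 3. Flag outliers using the 1.5 * IQR rule (an assumed, common heuristic).
    q1, _, q3 = quantiles(observed, n=4)
    spread = 1.5 * (q3 - q1)
    for r in unique:
        r["outlier"] = not (q1 - spread <= r[key] <= q3 + spread)

    return unique
```

Flagging outliers instead of deleting them keeps the decision about what to do with them visible to whoever analyzes the data next.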

With my background in Physics and Statistics, I have a solid foundation in data analysis techniques. I can efficiently extract meaningful insights from complex datasets, enabling me to make data-driven decisions. Also, my proficiency in Python and SQL further enhances my ability to query and manipulate data efficiently.

Machine Learning and AI: I have a strong foundation in machine learning techniques, with expertise in developing and deploying regression and classification models. My understanding of these models allows me to solve real-world problems by predicting outcomes and classifying data. My experience with Convolutional Neural Networks (CNNs) extends this to computer vision and image analysis tasks.

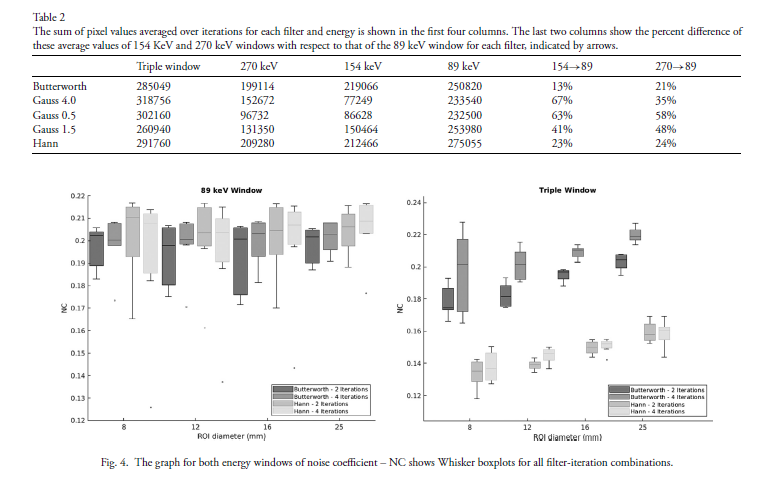

Source: Journal of Medical Imaging and Radiation Sciences

Abstract

Radium-223 dichloride image-based individual dosimetry requires an optimal acquisition and reconstruction protocol and proper image correction methods for theranostic applications. To assess this problem, radium-223 dichloride SPECT images were acquired from a Jaszczak simulator with a dual-headed gamma camera, LEHR collimator, 128 × 128 matrix, and total time of 32 minutes. A cylindrical PMMA phantom was used to calibrate the measurements performed with Jaszczak. The image quality parameters (noise coefficient, contrast, contrast-to-noise ratio and recovery coefficient) and septal penetration correction were calculated by MATLAB®. The best results for the investigated image quality parameters were obtained with an 89 keV energy window (24% wide) produced together with OSEM/MLEM reconstruction (8 subsets and 4 iterations) applying a Butterworth filter (order 10 and cutoff frequency of 0.48 cycles·cm⁻¹).
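For readers unfamiliar with the image quality parameters listed in the abstract, the sketch below computes them from region-of-interest (ROI) counts in plain Python. The formulas are common textbook-style definitions and are an assumption here; the paper itself used MATLAB® and may define these quantities differently. All function names are hypothetical.

```python
from statistics import mean, stdev


def noise_coefficient(background):
    """Relative noise: standard deviation over mean of the background ROI (assumed definition)."""
    return stdev(background) / mean(background)


def contrast(roi, background):
    """Contrast of the ROI relative to the background mean (assumed definition)."""
    return abs(mean(roi) - mean(background)) / mean(background)


def contrast_to_noise_ratio(roi, background):
    """Signal difference divided by the background standard deviation (assumed definition)."""
    return abs(mean(roi) - mean(background)) / stdev(background)


def recovery_coefficient(measured_activity, true_activity):
    """Fraction of the known activity recovered in the image (assumed definition)."""
    return measured_activity / true_activity
```

With ROI counts `[100, 110, 90, 100]` and background counts `[20, 22, 18, 20]`, for example, the ROI mean is 100 and the background mean is 20, so `contrast` returns 4.0 under this definition.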


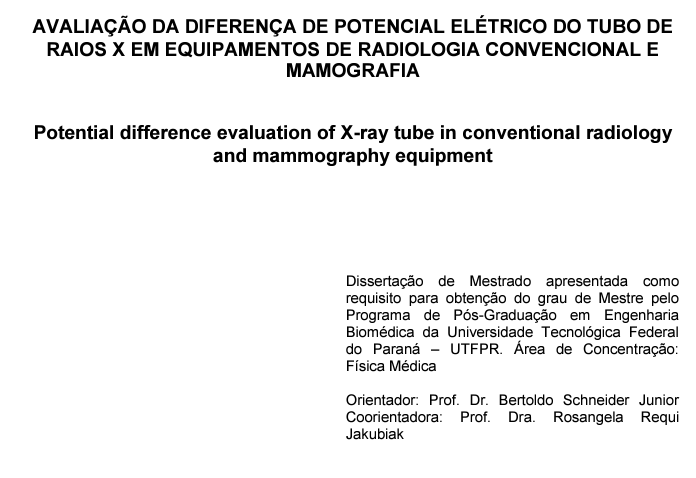

Source: UTFPR - Universidade Tecnológica Federal do Paraná

Master's Degree in Biomedical Engineering, earned at Universidade Tecnológica Federal do Paraná.

Abstract

The evaluation of the potential difference of X-ray equipment is a fundamental item in the quality control program of a radiodiagnosis clinic. This evaluation is important to ensure the patient’s radiological protection and to guarantee the quality of the exam image, on which a correct diagnosis depends. National standards, Portaria 453/98 and the recent RDC 330/19, establish different operating limits for the voltage produced by the equipment’s X-ray tube and do not present a clear definition for the term voltage. The objective of this work was to evaluate the performance of three X-ray units and three mammography units through the tests of accuracy and reproducibility established in the national standard.

Link to UTFPR Repository

Graduate Course in Artificial Intelligence Applied to Industry.

Summary

Certification of a Graduate Course in Artificial Intelligence applied to the industrial sector, from the HUB of Artificial Intelligence at Senai-Londrina.


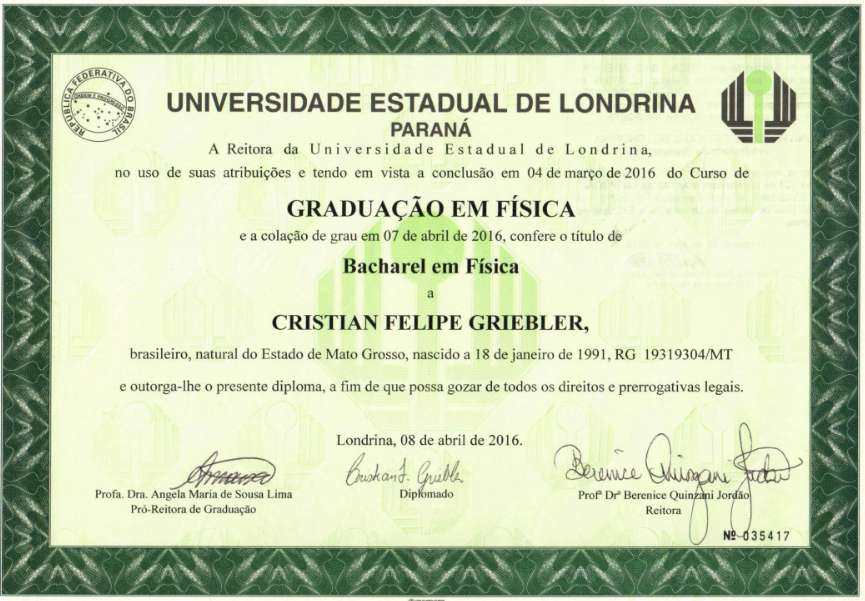

Source: UEL - Universidade Estadual de Londrina

Certification of a Bachelor's Degree in Physics.

Summary

Bachelor's Degree in Physics, earned at Universidade Estadual de Londrina (UEL).
